How We Got Here

Over the past decade, many campuses have been building up their surveillance arsenal and inviting a greater police presence on campus. EFF and fellow privacy and speech advocates have been clear that this is a dangerous trend that chills free expression and makes students feel less safe, while fostering an adversarial and distrustful relationship with the administration.

Many tools used on campuses overlap with the street-level surveillance used by law enforcement, but universities are in a unique position of power over students being monitored. For students, universities are not just their school, but often their home, employer, healthcare provider, visa sponsor, place of worship, and much more. This reliance heightens the risks imposed by surveillance, and brings it into potentially every aspect of students’ lives.

EFF has also been clear for years: as campuses build up their surveillance capabilities in the name of safety, they chill speech and foster a more adversarial relationship between students and the administration. Yet, this expansion has continued in recent years, especially after the COVID-19 lockdowns.

This came to a head in April, when groups across the U.S. pressured their universities to disclose and divest their financial interest in companies doing business in Israel and weapons manufacturers, and to distance themselves from ties to the defense industry. These protests echo similar campus divestment campaigns against the prison industry in 2015, and the campaign against apartheid South Africa in the 1980s. However, the current divestment movement has been met with disproportionate suppression and unprecedented digital surveillance from many universities.

This guide is written with those involved in protests in mind. Student journalists covering protests may also face digital threats and can refer to our previous guide to journalists covering protests.

Campus Security Planning

Putting together a security plan is an essential first step to protect yourself from surveillance. You can’t protect all information from everyone, and as a practical matter you probably wouldn’t want to. Instead, you want to identify what information is sensitive and who should and shouldn’t have access to it.

That means this plan will be very specific to your context and your own tolerance of risk from physical and psychological harm. For a more general walkthrough you can check out our Security Plan article on Surveillance Self-Defense. Here, we will walk through this process with prevalent concerns from current campus protests.

What do I want to protect?

Current university protests are a rapid and decentralized response to what the UN International Court of Justice ruled as a plausible case of genocide in Gaza, and to the reported humanitarian crisis in occupied East Jerusalem and the West Bank. Such movements will need to focus on secure communication, immediate safety at protests, and protection from collected data being used for retaliation—either at protests themselves or on social media.

At a protest, a mix of visible and invisible surveillance may be used to identify protesters. This can include administrators or law enforcement simply attending and keeping notes of what is said, but often digital recordings can make that same approach less plainly visible. This doesn't just include video and audio recordings; protesters may also be subject to tracking methods like face recognition technology and location tracking from their phone, school ID usage, or other sensors. So here, you want to be mindful of anything you say or carry on your person that can reveal your identity or role in the protest, or those of fellow protesters.

This may also be paired with online surveillance. The university or police may monitor activity on social media, even joining private or closed groups to gather information. Of course, any services hosted by the university, such as email or WiFi networks, can also be monitored for activity. Again, taking care of what information is shared with whom is essential, including carefully separating public information (like the time of a rally) and private information (like your location when attending). Also keep in mind how what you say publicly, even in a moment of frustration, may be used to draw negative attention to yourself and undermine the cause.

However, many people may strategically use their position and identity publicly to lend credibility to a movement, such as a prominent author or alumnus. In doing so they should be mindful of those around them in more vulnerable positions.

Who do I want to protect it from?

Divestment challenges the financial underpinning of many institutions in higher education. The most immediate adversaries are clear: the university being pressured and the institutions being targeted for divestment.

However, many schools are escalating by inviting police on campus, sometimes as support for their existing campus police, making them yet another potential adversary. Pro-Palestine protests have drawn attention from some federal agencies, meaning law enforcement will inevitably be a potential surveillance adversary even when not invited by universities.

With any sensitive political issue, there are also people who will oppose your position. Others at the protest can escalate threats to safety, or try to intimidate and discredit those they disagree with. Private actors, whether individuals or groups, can weaponize surveillance tools available to consumers online or at a protest, even if it is as simple as video recording and doxxing attendees.

How bad are the consequences if I fail?

Failing to protect information can have a range of consequences that will depend on the institution and local law enforcement’s response. Some schools defused campus protests by agreeing to enter talks with protesters. Others opted to escalate tensions by having police dismantle encampments and having participants suspended, expelled, or arrested. Such disproportionate disciplinary actions put students at risk in myriad ways, depending how they relied on the institution. The extent to which institutions will attempt to chill speech with surveillance will vary, but unlike direct physical disruption, surveillance tools may be used with less hesitation.

All interactions with law enforcement carry some risk, and will differ based on your identity and history of police interactions. This risk can be mitigated by knowing your rights and limiting your communication with police unless in the presence of an attorney.

How likely is it that I will need to protect it?

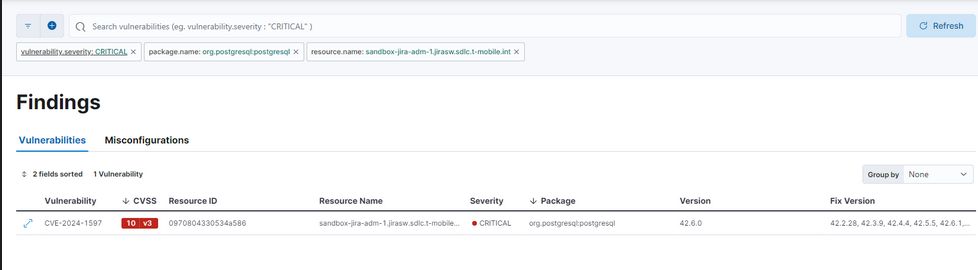

Disproportionate disciplinary actions will often coincide with and be preceded by some form of surveillance. Even schools that are more accommodating of peace protests may engage in some level of monitoring, particularly schools that have already adopted surveillance tech. School devices, services, and networks are also easy targets, so try to use alternatives to these when possible. Stick to using personal devices and not university-administered ones for sensitive information, and adopt tools to limit monitoring, like Tor. Even banal systems like campus ID cards, presence monitors, class attendance monitoring, and wifi access points can create a record of student locations or tip off schools to people congregating. Online surveillance is also easy to implement by simply joining groups on social media, or even adopting commercial social media monitoring tools.

Schools that invite a police presence make their students and workers subject to the current practices of local law enforcement. Our resource, the Atlas of Surveillance, gives an idea of what technology local law enforcement is capable of using, and our Street-Level Surveillance hub breaks down the capabilities of each device. But other factors, like how well-resourced local law enforcement is, will determine the scale of the response. For example, if local law enforcement already have social media monitoring programs, they may use them on protesters at the request of the university.

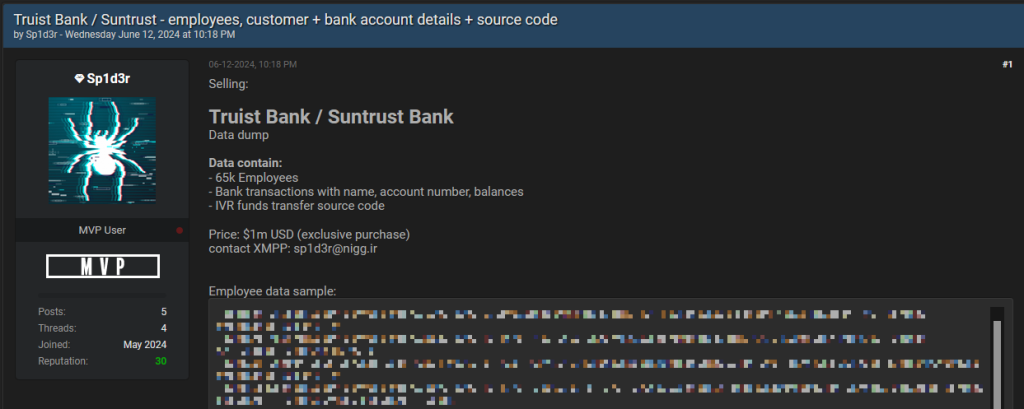

Bad actors not directly affiliated with the university or law enforcement may be the most difficult factor to anticipate. These threats can arise from people who are physically present, such as onlookers or counter-protesters, and individuals who are offsite. Information about protesters can be turned against them for purposes of surveillance, harassment, or doxxing. Taking measures found in this guide will also be useful to protect yourself from this potentiality.

Finally, don’t confuse your rights with your safety. Even if you are in a context where assembly is legal and surveillance and suppression are not, be prepared for them to happen anyway. Legal protections are retrospective, so for your own safety, be prepared for adversaries willing to overstep these protections.

How much trouble am I willing to go through to try to prevent potential consequences?

There is no perfect answer to this question, and every individual protester has their own risks and considerations. In setting this boundary, it is important to communicate it with others and find workable solutions that meet people where they’re at. Being open and judgment-free in these discussions makes the movement being built more consensual and less prone to abuses. Centering consent in organizing can also help weed out bad actors in your own camp who will raise the risk for all who participate, deliberately or not.

Sometimes a surveillance self-defense tactic will invite new threats. Some universities and governments have been so eager to get images of protesters’ faces that they have threatened criminal penalties for people wearing masks at gatherings. These new potential charges must now be weighed against the potential harms of face recognition technology, doxxing, and retribution someone may face by exposing their face.

Privacy is also a team sport. Investing a lot of energy in only your own personal surveillance defense may have diminishing returns, but making an effort to educate peers and adjust the norms of the movement puts less work on any one person and has a potentially greater impact. Sharing resources in this post and the surveillance self-defense guides, and hosting your own workshops with the security education companion, are good first steps.

Who are my allies?

Cast a wide net of support; many members of faculty and staff may be able to provide forms of support to students, like institutional knowledge about school policies. Many school alumni are also invested in the reputation of their alma mater, and can bring outside knowledge and resources.

A number of non-profit organizations can also support protesters who face risks on campus. For example, many campus bail funds have been set up to support arrested protesters. The National Lawyers Guild has chapters across the U.S. that can offer Know Your Rights training, provide legal observers, and train people to become legal observers (people who document a protest so that there is a clear legal record of civil liberties infringements should protesters face prosecution).

Many local solidarity groups may also be able to help provide trainings, street medics, and jail support. Many groups in EFF’s grassroots network, the Electronic Frontier Alliance, also offer free digital rights training and consultations.

Finally, EFF can help victims of surveillance directly when they email info@eff.org or message 510-243-8020 on Signal. Even when EFF cannot take on your case, we have a wide network of attorneys and cybersecurity researchers who can offer support.

Tips and Resources

Keep in mind that nearly any electronic device you own can be used to track you, but there are a few steps you can take to make that data collection more difficult. To prevent tracking, your best option is to leave all your devices at home, but that’s not always possible, and it makes communication and planning much more difficult. So, it’s useful to get an idea of what sorts of surveillance are feasible, and what you can do to prevent them. This is meant as a starting point, not a comprehensive summary of everything you may need to do or know:

Prepare yourself and your devices for protests

Our guide for attending a protest covers the basics for protecting your smartphone and laptop, as well as providing guidance on how to communicate and share information responsibly. We have a handy printable version available here, too, that makes it easy to share with others.

Beyond preparing according to your security plan, preparing plans with networks of support outside of the protest is a good idea. Tell friends or family when you plan to attend and leave, so that if there are arrests or harassment they can follow up to make sure you are safe. If there may be arrests, make sure to have the phone number of an attorney and possibly coordinate with a jail support group.

Protect your online accounts

Doxxing, when someone exposes information about you, is a tactic reportedly being used on some protesters. This information is often found in public places, like "people search" sites and social media. Being doxxed can be overwhelming and difficult to control in the moment, but you can take some steps to manage it or at least prepare yourself for what information is available. To get started, check out this guide that the New York Times created to train its journalists how to dox themselves, and Pen America's Online Harassment Field Manual.

Compartmentalize

Being deliberate about how and where information is shared can limit the impact of any one breach of privacy. Online, this might look like using different accounts for different purposes or preferring smaller Signal chats, and offline it might mean being deliberate about with whom information is shared, and bringing “clean” devices (without sensitive information) to protests.

Be mindful of potential student surveillance tools

It’s difficult to know which tools each campus is using to track protesters, but it’s possible that colleges are using the same tricks they’ve used for monitoring students in the past alongside surveillance tools often used by campus police. One good rule of thumb: if a device, software, or an online account was provided by the school (like a .edu email address or test-taking monitoring software), then the school may be able to access what you do on it. Likewise, remember that if you use a corporate or university-controlled tool without end-to-end encryption for communication or collaboration, like online documents or email, content may be shared by the corporation or university with law enforcement when compelled with a warrant.

Know your rights if you’re arrested

Thousands of students, staff, faculty, and community members have been arrested, but it’s important to remember that the vast majority of the people who have participated in street and campus demonstrations have not been arrested or taken into custody. Nevertheless, be careful and know what to do if you’re arrested.

The safest bet is to lock your devices with a PIN or password, turn off biometric unlocks such as face or fingerprint, and say nothing except to assert your rights, for example, by refusing consent to a search of your devices, bags, vehicles, or home. Law enforcement can lie and pressure arrestees into saying things that are later used against them, so waiting until you have a lawyer before speaking is always the right call.

Barring a warrant, law enforcement cannot compel you to unlock your devices or answer questions, beyond basic identification in some jurisdictions. Law enforcement may not respect your rights when they’re taking you into custody, but your lawyer and the courts can protect your rights later, especially if you assert them during the arrest and any time in custody.

By combining their resources and expertise, the CPPA and CNIL aim to tackle a range of data privacy issues, from the implications of new technologies to the enforcement of data protection laws. This partnership is expected to lead to the development of innovative solutions and best practices that can be shared with other regulatory bodies around the world.

As more organizations and governments recognize the importance of safeguarding personal data, the need for robust and cooperative frameworks becomes increasingly clear. The CPPA-CNIL partnership serves as a model for other regions looking to strengthen their data privacy measures through international collaboration.

By combining their resources and expertise, the CPPA and CNIL aim to tackle a range of data privacy issues, from the implications of new technologies to the enforcement of data protection laws. This partnership is expected to lead to the development of innovative solutions and best practices that can be shared with other regulatory bodies around the world.

As more organizations and governments recognize the importance of safeguarding personal data, the need for robust and cooperative frameworks becomes increasingly clear. The CPPA-CNIL partnership serves as a model for other regions looking to strengthen their data privacy measures through international collaboration.

Source: security.apple.com[/caption]

At the heart of PCC is Apple's stated commitment to on-device processing. When Apple is responsible for user

Source: security.apple.com[/caption]

At the heart of PCC is Apple's stated commitment to on-device processing. When Apple is responsible for user  Source: X.com[/caption]

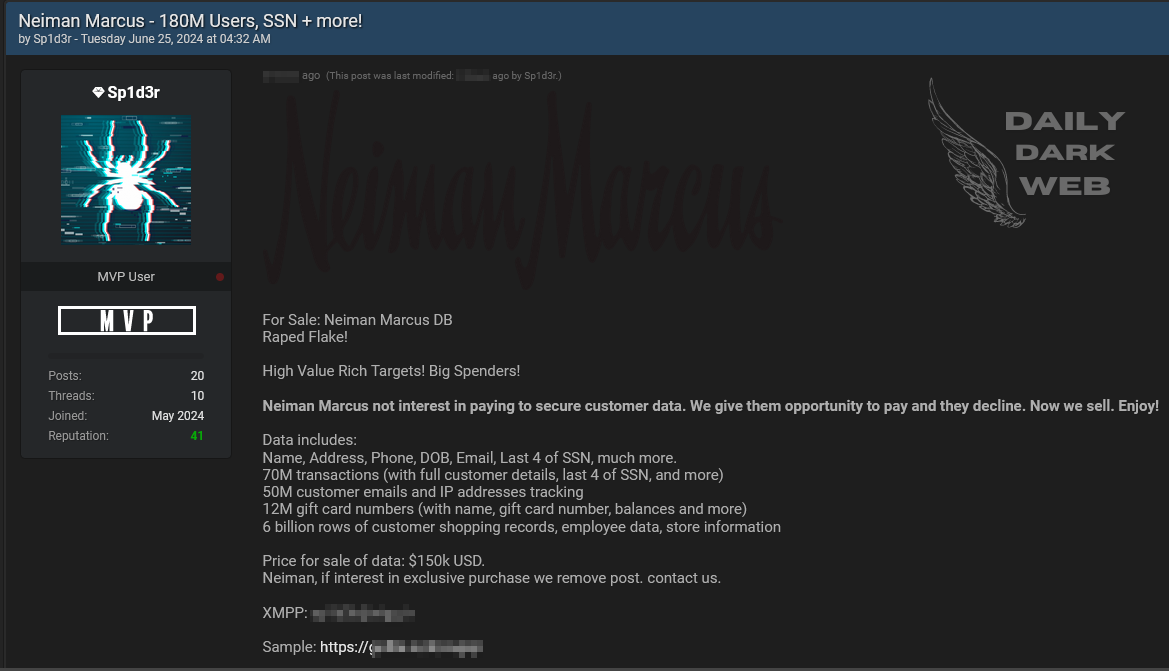

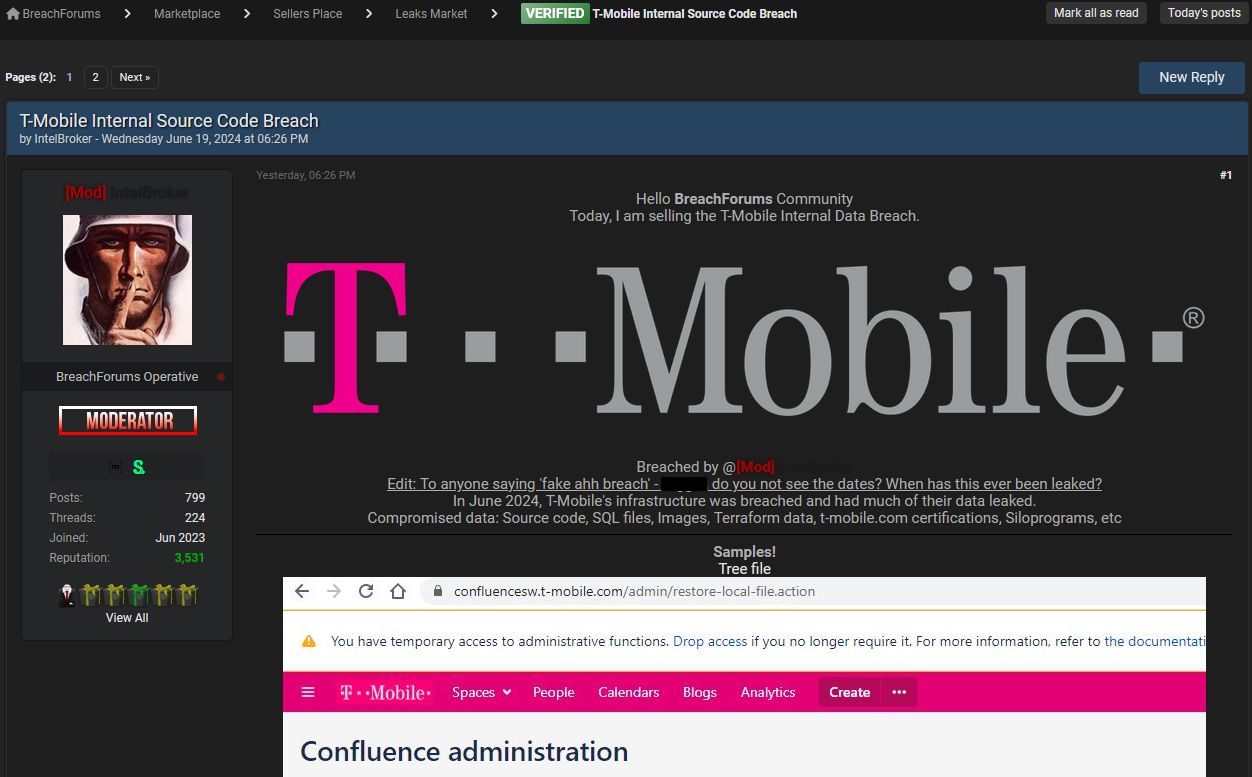

[caption id="attachment_76693" align="alignnone" width="418"]

Source: X.com[/caption]

[caption id="attachment_76693" align="alignnone" width="418"] Source: X.com[/caption]

[caption id="attachment_76695" align="alignnone" width="462"]

Source: X.com[/caption]

[caption id="attachment_76695" align="alignnone" width="462"] Source: X.com[/caption]

According to

Source: X.com[/caption]

According to