Like Shooting Phish in a Barrel

PHISHING SCHOOL

Bypassing Link Crawlers

You’ve just convinced a target user to click your link. In doing so, you have achieved the critical step in social engineering:

Convincing someone to let you in the door!

Now, we just have a few more technical controls that might get in the way of us reeling in our catch. The first of these is link crawlers.

What’s a Link Crawler?

Link crawlers, or “protected links,” are one of the most annoying email controls out there. I don’t hate them because of their effectiveness (or ineffectiveness) at blocking phishing. I hate them because they are a real pain for end users, and so many secure email gateways (SEGs) use them as a security feature these days. Here’s what they do, and why they suck:

What link crawlers do

They replace every link in an email with a link to their own web server, tagged with a unique ID that is used to look up the original link. Then, when a user clicks a link in the email, they first get sent to purgatory… oops, I mean the “protected link” website. The SEG’s web server looks up the original link and then sends out a web crawler to check out the content of the link’s webpage. If the page looks too phishy, the user will be blocked from accessing the link’s URL. If the crawler thinks the page is benign, it will forward the user on to the original link’s URL.
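To make the rewriting step concrete, here is a minimal sketch of what a link-rewriting gateway might do, assuming a made-up gateway host (`safelinks.example.com`) and a simple in-memory lookup table. Real SEGs each use their own URL formats and storage, so treat every name here as hypothetical.

```javascript
const crypto = require("node:crypto");

// Hypothetical gateway prefix; real SEGs each use their own domain and URL format.
const GATEWAY = "https://safelinks.example.com/r/";

// Lookup table from unique ID to original URL (a real SEG would use a database).
const linkTable = new Map();

// Replace every http(s) URL in an email body with a gateway URL carrying a unique ID.
function rewriteLinks(body) {
  return body.replace(/https?:\/\/[^\s"'<>]+/g, (url) => {
    const id = crypto.randomBytes(8).toString("hex");
    linkTable.set(id, url);
    return GATEWAY + id;
  });
}

// On click, the gateway maps the ID back to the original URL, which it would
// then crawl before deciding whether to forward the user.
function resolveLink(gatewayUrl) {
  if (!gatewayUrl.startsWith(GATEWAY)) return undefined;
  return linkTable.get(gatewayUrl.slice(GATEWAY.length));
}
```

Note that the rewritten body no longer contains the original destination at all, which is exactly why hovering over a protected link tells the user nothing.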

Why link crawlers suck

First, have you ever had to reset your password for some web service you rarely use, and they sent you a one-time-use link to start the reset process? If your SEG replaces that link with a “safe link” and has to check out the real link before allowing you to visit it, then guess what? You will never be able to reset your password, because your SEG keeps killing your one-time-use links! I know that some link crawlers work in the opposite order (i.e., crawling the link right after the user visits it) to get around this problem, but most of them click first and send the user to a dead one-time-use URL later.

Second, these link replacements also leave the end user with no clue where a link is really going to send them. Hovering over a link in an email will always show a URL for the SEG’s link crawling service, so there is no way for the user to detect a masked link.

Third, I see these “security features” as really just an overt data grab by the SEGs that implement them. They want to collect valuable user behavior telemetry more than they really care about protecting users from phishing campaigns. I wonder how many SEGs sell this data to the devil… oops, I mean “marketing firms”. It all seems very “big brother” to me.

Lastly, this control is not even that hard to bypass. I think if link crawlers were extremely effective at blocking phishing attacks, then I would be more forgiving and able to accept their downsides as a necessary evil. However, that is not the case. Let’s go ahead and talk about ways to bypass them!

Parser Bypasses

Similar to bypassing link filter protections, if the SEG’s link parser doesn’t see our link, then it can’t replace it with a safe link. By focusing on this tactic we can sometimes get two bypasses for the price of one. We’ve already covered this in Feeding the Phishes, so I’ll skip the details here.

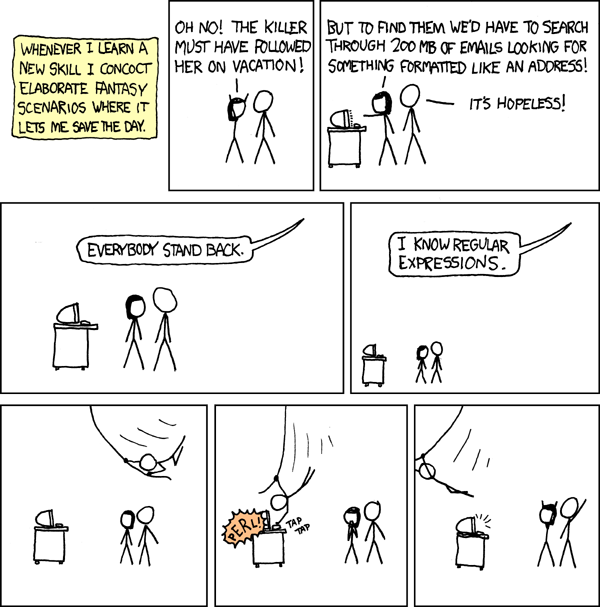

Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA)

Link crawlers are just robots. Therefore, to defeat link crawlers, we need to wage war with the robots! We smarty pants humans have employed CAPTCHAs as robot bouncers for decades now to kick them out of our websites. We can use the same approach to protect our phishing pages from crawlers as well. Because SEGs just want a peek at our website content to determine whether it’s benign, they have no real motivation to employ any sort of CAPTCHA solver logic. For us, that means our CAPTCHAs don’t even have to be as complicated or secure as they would need to be to protect a real website. I personally think this approach has been overused and abused by spammers to the point that many users are sketched out by CAPTCHAs, but it’s still a valid link crawler bypass for many SEGs and a pretty quick and obvious choice.

Fun Fact (think about it)

Because the test is administered by a computer, in contrast to the standard Turing test that is administered by a human, CAPTCHAs are sometimes described as reverse Turing tests. — Wikipedia

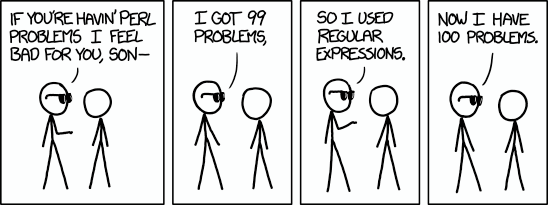

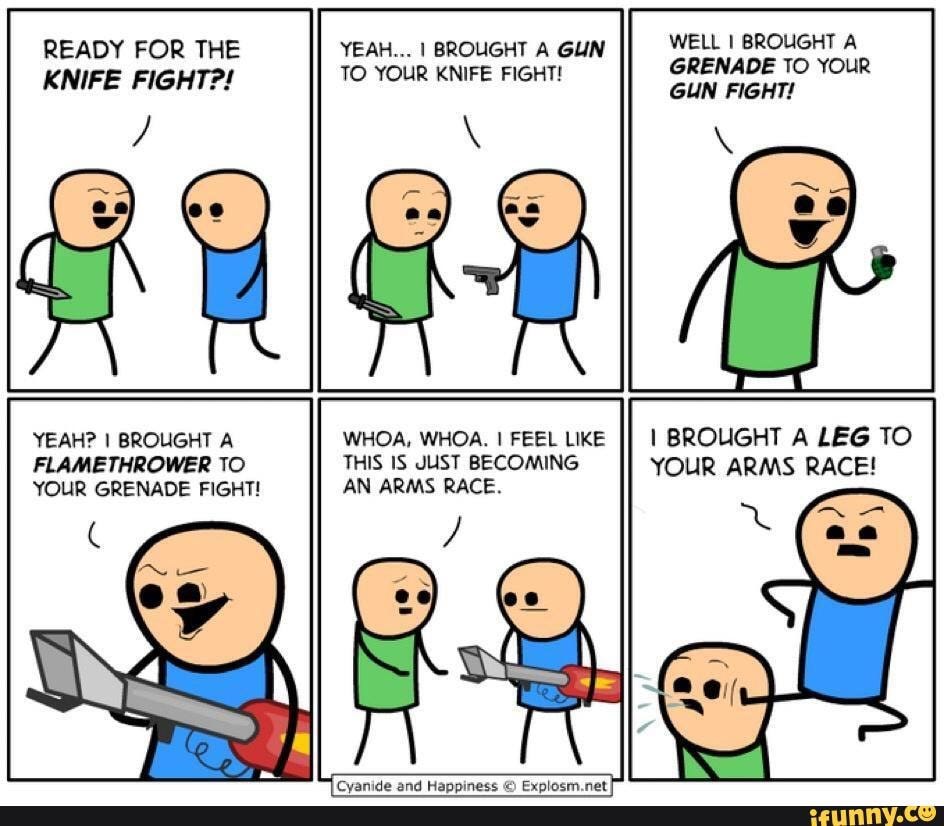

Join the Redirect Arms Race

Warning: I don’t actually recommend this, but it works, and therefore we have to talk about it. I’ll try to keep it brief.

Q: If you are tasked with implementing a link checker, how should you treat redirects like HTTP 302 responses?

A: They are common enough that you should probably follow the redirect.

Q: What about if it redirects again? This time via JavaScript instead of an HTTP response code. Should you follow it?

A: Yes, this is common enough too, so maybe we should follow it.

Q: But how many times?

A: ???

That’s up to you as the software engineer to decide. If you specify a depth of 3 and your script gets redirected 3 times, it’s going to stop following redirects and just assess the content on the current page. Phishers are going to redirect 4 times and defeat your bot. You’re going to up the depth, then they are going to up the redirects, and so on and so forth ad nauseam.
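From the crawler author’s side, the depth limit boils down to something like the sketch below. It assumes a hypothetical `getRedirect(url)` helper that returns the next hop (from a 302 `Location` header or a JavaScript redirect) or `null`; the helper is injected so the loop logic can be shown without any networking.

```javascript
// Follow redirects up to maxDepth hops, then assess whatever page we land on.
// `getRedirect(url)` is a hypothetical helper: next URL, or null if no redirect.
function resolveWithDepth(startUrl, getRedirect, maxDepth) {
  let url = startUrl;
  for (let hop = 0; hop < maxDepth; hop++) {
    const next = getRedirect(url);
    if (next === null) return { url, finished: true };
    url = next;
  }
  // Depth exhausted: the crawler assesses this page even if it would redirect again.
  return { url, finished: getRedirect(url) === null };
}
```

With a chain of four redirects and `maxDepth` set to 3, the crawler stops one hop short and assesses the intermediate page; raising the limit to 4 reaches the final page, which is the arms race in a nutshell.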

Welcome to the redirect arms race.

I see this “in the wild” all the time, so it must work as a bypass, but it is annoying, and cliché, and kinda dumb in my opinion. So…

Please don’t join the redirect arms race!!!

Alert!

Link crawling bots tend to come in two different flavors:

- Content scraping scripts

- Automated browser scripts

The easiest to implement, and therefore the most common, type of link crawler is a simple content scraping script. You can think of these as a scripted version of using a tool like cURL to pull a URL’s content and check it for phishy language. These scripts might also look for links on the page and crawl each of them to a certain depth.
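A naive content scraper of this kind might look like the following sketch. The keyword list and scoring are purely hypothetical (real products use far richer signals); the point is that the scraper only ever sees raw markup and never runs the page’s JavaScript.

```javascript
// Hypothetical keyword list; real products use far richer signals.
const PHISHY_TERMS = ["verify your account", "password", "sign in", "urgent"];

// Score raw HTML the way a simple scraper might: count case-insensitive keyword hits.
// Nothing here executes JavaScript, so script-driven behavior is invisible to it.
function phishyScore(html) {
  const text = html.toLowerCase();
  return PHISHY_TERMS.filter((term) => text.includes(term)).length;
}

// Fetch and score a URL, cURL-style (global fetch is available in Node 18+).
async function scanUrl(url) {
  const res = await fetch(url, { redirect: "follow" });
  return phishyScore(await res.text());
}
```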

Because scrapers just read the content, they don’t actually execute any JavaScript like a web browser would. We can use this to our advantage by using JavaScript to require user interaction before forwarding the visitor on to the real phishing content. One very simple way of doing this is to include an “alert()” statement just before a “window.location” change. If we obfuscate the “window.location” change, then the scraper will not see any additional content it needs to vet. When a human user clicks our link and views our page in their browser, they will get a simple alert message that they have to accept, and then they will be forwarded to the real phishing page. The content of the alert doesn’t matter, so we can use it to play into the wording of our pretext (e.g., “Downloading your document. Click OK to continue.”).

Because it is simply too easy to defeat content scrapers with JavaScript, some SEGs have gone through the trouble of scripting automated browsers to crawl links. While the automated browser can execute JavaScript, emulating human interaction is still difficult. Once again, if we use an “alert()” function on our page, JavaScript execution will be blocked until the user accepts the message. In some cases, if the software engineer who wrote the script did not consider alert boxes, then their automated browser instance will be stuck on the page while the alert is asking for user input. If the software engineer does consider alerts, then they will likely stumble upon a common solution like the following:

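```javascript
// Auto-accept every alert/confirm/prompt the moment it opens.
// `page` is a Puppeteer Page object.
page.on('dialog', async (dialog) => {
  await dialog.accept();
});
```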

I myself have used this very technique to accept alerts when writing web scrapers for OSINT.

The problem with this solution is that it will accept the dialog (alert) immediately when it appears. A real human is going to need at least a second or two to see the alert, read it, move their mouse to the accept button, and click. If we set a timer on the alert box acceptance, we can pretty easily detect when a bot is accepting the alert and send them somewhere else. Few software engineers who are tasked with implementing these link crawler scripts will think this many moves in advance, and therefore even simple bypasses like this tend to work surprisingly well.
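A minimal sketch of that timing check follows, assuming a one-second threshold for human reaction time (an assumed value, in line with the “second or two” estimate). The dialog function and clock are passed in so the logic can run outside a browser; in a page you would pass `window.alert.bind(window)`, which blocks script execution until the dialog is dismissed.

```javascript
// Minimum plausible human reaction time in milliseconds (an assumed threshold).
const MIN_HUMAN_MS = 1000;

// Measure how long a blocking dialog stays open. In a browser, showAlert would
// be window.alert, which pauses script execution until the dialog is dismissed.
function timeDialog(showAlert, message, now = () => Date.now()) {
  const start = now();
  showAlert(message);
  return now() - start;
}

// A near-instant dismissal suggests an automated dialog.accept() handler.
function looksAutomated(elapsedMs, minHumanMs = MIN_HUMAN_MS) {
  return elapsedMs < minHumanMs;
}
```

In a page, usage might look like `if (looksAutomated(timeDialog(window.alert.bind(window), "Click OK to continue."))) { /* send them somewhere else */ }`. A Puppeteer handler like the one above accepts within milliseconds, well under any realistic human delay.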

Count the Clicks

If we keep in mind that most link crawlers visit a link’s URL just before sending the user to the same URL, we can often defeat them by simply displaying different content for the first click. The link crawler sees something benign and allows the user through, and that’s when we swap out the content. It is possible to achieve this directly in Apache using the ‘mod_security’ module or in Nginx using the ‘map’ directive. That said, it may be easiest in most cases to let a tool like Satellite do this for you using its ‘times_served’ feature:

https://github.com/t94j0/satellite/wiki/Route-Configuration

I tend to prefer writing my own basic web servers for hosting phishing sites so I have more granular control over the data I want to track and log. It’s then pretty simple to either keep a dictionary in memory to track clicks, or add a SQLite database if you want that data to persist across server restarts.
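The in-memory version of that click tracking can be as small as the sketch below. It assumes each email carries a unique link ID, and `benignPage` / `realPage` are hypothetical placeholders for whatever content you serve; swapping the `Map` for a SQLite table would make the counts survive restarts.

```javascript
// Per-link click counts kept in memory (lost on restart; use SQLite to persist).
const clickCounts = new Map();

// Record a visit to a tracked link ID and return how many times it has been seen.
function recordClick(linkId) {
  const count = (clickCounts.get(linkId) ?? 0) + 1;
  clickCounts.set(linkId, count);
  return count;
}

// Assume the first request for a link is the crawler; later requests are the user.
// benignPage and realPage are hypothetical placeholders for the content you serve.
function chooseContent(linkId, benignPage, realPage) {
  return recordClick(linkId) === 1 ? benignPage : realPage;
}
```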

Browser Fingerprinting

One way to detect bots that is far less intrusive and annoying than CAPTCHAs is browser fingerprinting. The idea here is to use features of JavaScript to determine what type of browser is visiting your webpage. Browser fingerprinting is extremely common today, both for tracking users without cookies and for defending against bots. Many automated browser frameworks like Selenium and Puppeteer have default behaviors that are easy to detect. For example, most headless browsers have their ‘outerHeight’ and ‘outerWidth’ attributes set to zero. There are many other well-known indicators that your site is being viewed by a bot. Here’s a nice tool if you would like to start tinkering:
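Here is a minimal sketch of a few such checks. It takes a window-like object so it can be exercised outside a browser (in a page you would pass `window`). Beyond the zero outer dimensions mentioned above, it adds two commonly cited indicators: `navigator.webdriver`, which the WebDriver specification sets to `true` under automation, and the `HeadlessChrome` token that older headless Chrome builds put in their user agent. The list is illustrative, not exhaustive, and newer headless modes may not trip these checks.

```javascript
// Collect a few well-known automation indicators from a window-like object.
// In a real page, pass `window`; these checks are illustrative, not exhaustive.
function headlessSignals(win) {
  const signals = [];
  const nav = win.navigator || {};
  if (win.outerHeight === 0 && win.outerWidth === 0) {
    signals.push("zero outer dimensions");
  }
  if (nav.webdriver === true) {
    signals.push("navigator.webdriver");
  }
  if (/HeadlessChrome/.test(nav.userAgent || "")) {
    signals.push("headless user agent");
  }
  return signals;
}
```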

https://github.com/infosimples/detect-headless

Another more extensive open source project that was designed for user tracking but can also be useful for this type of bot protection is FingerprintJS:

https://github.com/fingerprintjs/fingerprintjs

Most SEGs that implement link crawlers are not really incentivized to stay on the bleeding edge when it comes to hiding their bots. Therefore, in the vast majority of cases, we will be able to block SEG link crawlers with just open source tooling. However, there are also some really amazing paid options out there that specialize in bot protection and are constantly on the bleeding edge. For example, Dylan Evans wrote a great blog post and some tooling that demonstrate how to thwart bots using Cloudflare Turnstile:

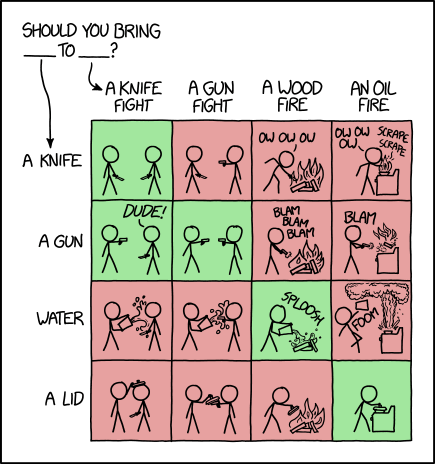

I would imagine just about any decent paid solution will thwart any SEG link crawlers you might encounter. It’s sort of like bringing a gun to a knife fight.

ASN Blocking

An autonomous system number (ASN) identifies an autonomous system: a group of IP ranges under the control of a single organization. In practice, every public IP address belongs to an ASN, and you can look up the organization that owns each ASN. Because most SEGs operate using a SaaS model, when their link crawler visits your site, the traffic will originate from an ASN owned by the SEG, by their internet service provider, or by a cloud computing service they use to run their software. In any case, the ASN will likely be consistent for a particular SEG and will differ from the ASNs associated with your target users’ IPs. If you collect IP and ASN data on your phishing sites, you can build a list of known-bad ASNs that you should block from viewing your real content. This can help you display benign content to SEG link crawlers, and it will also help protect you from web crawlers in general that might put your domain on a blocklist.
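A minimal sketch of turning collected click data into a known-bad ASN list is below. It assumes each log entry already has its ASN resolved by whatever IP-to-ASN lookup you use (not shown), and that you know which ASNs your target users normally come from; the entry shape `{ ip, asn, org }` is a hypothetical format.

```javascript
// Each log entry is assumed to look like { ip, asn, org }, with the ASN already
// resolved by an IP-to-ASN lookup of your choice (not shown here).
// targetAsns is a Set of ASNs your target users are expected to come from.
function buildAsnBlocklist(clickLog, targetAsns) {
  const blocklist = new Set();
  for (const entry of clickLog) {
    if (!targetAsns.has(entry.asn)) blocklist.add(entry.asn);
  }
  return blocklist;
}

// Visitors from a known-bad ASN get the benign content.
function shouldServeBenign(asn, blocklist) {
  return blocklist.has(asn);
}
```

Unknown ASNs that have never appeared in your logs are not blocked by this sketch; whether to default-deny them instead is a judgment call that depends on how well you know your targets’ networks.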

Call Them

Once again, because link crawlers are an email control, we can bypass them by simply not using email as our message transport for social engineering. Calling from a spoofed number is easy and often highly effective.

Story of the Aggressive Link Crawler

Some SEGs actually crawl all links in an email, whether they are clicked by a user or not. The first time I encountered one, I thought I was having the most successful phishing campaign ever until I realized that my click rate was just under 100% (some messages must have bounced or something).

Luckily, I had already added a quick IP address lookup feature to Phishmonger at this point, and I was able to see that the SEG was generating clicks from IPs all around the world. They were using an IP rotation service to make their crawler harder to block and/or stop.

Also luckily, my client was based in and operated out of a single U.S. state. I was able to put a GeoIP filter on HumbleChameleon, which I was using as my web server, and redirect the fake clicks elsewhere.

This worked well enough as a workaround in this scenario, but it would not work for bigger companies with a wider global footprint. I got lucky. I think a better generalized bypass for this type of link crawler is to track the time between sending an email and the user’s click. These aggressive link crawlers are trying to vet the links in an email before the user has a chance to see and click them, so they will visit your links almost immediately, within seconds to a couple of minutes of receiving each email. They don’t have the luxury of being able to “jitter” their click times without seriously jamming the flow of incoming emails. Therefore, we can treat any click that happens within 2–3 minutes of sending the email as highly suspicious and either reject it (i.e., return a 404 and ask the user to come back later) or apply some additional vetting logic to make sure we aren’t dealing with a bot.
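The delivery-to-click check is simple to express. This sketch assumes you log a send timestamp per message and a click timestamp per visit, and uses a three-minute window as an assumed default at the upper end of the 2–3 minute range above.

```javascript
// Clicks within this window after sending are treated as suspicious
// (3 minutes is an assumed default at the top of the 2-3 minute range).
const SUSPICIOUS_WINDOW_MS = 3 * 60 * 1000;

// sentAt and clickedAt are Date objects taken from your own send and click logs.
// Negative deltas (clock skew or bad logs) also count as suspicious.
function isSuspiciousClick(sentAt, clickedAt, windowMs = SUSPICIOUS_WINDOW_MS) {
  const delta = clickedAt.getTime() - sentAt.getTime();
  return delta < windowMs;
}
```

A suspicious result does not have to mean an outright rejection; it can simply route the visitor through the extra vetting logic described above.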

In Summary

Link crawlers, or “safe links,” allow SEGs to use bots to vet your phishing links before users are allowed to visit them. This control is annoying, but not very difficult to bypass. We just need to use some tried and true methods of detecting bots. There are some great open source and paid solutions that can help us detect bots and show them benign content.

SEGs aren’t properly incentivized to stay on the bleeding edge of writing stealthy web crawlers, so we can get some pretty good mileage out of known techniques and free tools. Just bring a leg to the arms race.

Finally, it’s always a good idea to collect data on your campaigns beyond just clicks, and to review that data for evidence of controls like link crawlers. If you aren’t tracking any browser fingerprints, or IP locations, or delivery-to-click times, then how can you say with any confidence what was a legitimate click versus a security product looking at your phishing page? Feedback loops are an essential part of developing and improving our tradecraft. Go collect some data!

Like Shooting Phish in a Barrel was originally published in Posts By SpecterOps Team Members on Medium.

The post Like Shooting Phish in a Barrel appeared first on Security Boulevard.