Why NASA and Boeing Are Being So Careful to Bring the Starliner Astronauts Home

© NASA, via Associated Press

Claimants include family of 11-year-old girl who spent three weeks on dialysis after eating chicken salad sandwich

Tesco and Asda are being sued by customers, including the family of an 11-year-old girl, who were left seriously ill after eating own-brand sandwiches linked to an outbreak of E coli.

The supermarkets face legal action after a child and adult were left in hospital. One person has been confirmed to have died and more than 120 others including a six-year-old have been hospitalised in the UK due to the bacteria.

© Photograph: Islandstock/Alamy

Death in England linked to outbreak by officials, who say lettuce is the likely source of the illness

One person has died and more than 120 others including children as young as six have been hospitalised in the UK amid an E coli outbreak linked to lettuce.

Two people in England died within 28 days of infection with shiga toxin-producing E coli (Stec), the UK Health Security Agency (UKHSA) said in a briefing on Thursday.

© Photograph: LauriPatterson/Getty Images

Authorities say products contain ‘unapproved novel food ingredients’ as company Uncle Frog states ‘consuming the whole bag could … make people feel weird’

People have been hospitalised across Australia with symptoms including “disturbing” hallucinations, dizziness and involuntary twitching after ingesting mushroom gummies made by a Byron Bay business.

A South Australian teenage boy was found unresponsive earlier this month after consuming several of the Uncle Frog’s Mushroom Gummies, the state health department said on Thursday. He was treated and has since recovered.

© Photograph: Uncle Frog's

© Cydni Elledge for The New York Times

Enlarge (credit: Aurich Lawson | Getty Images)

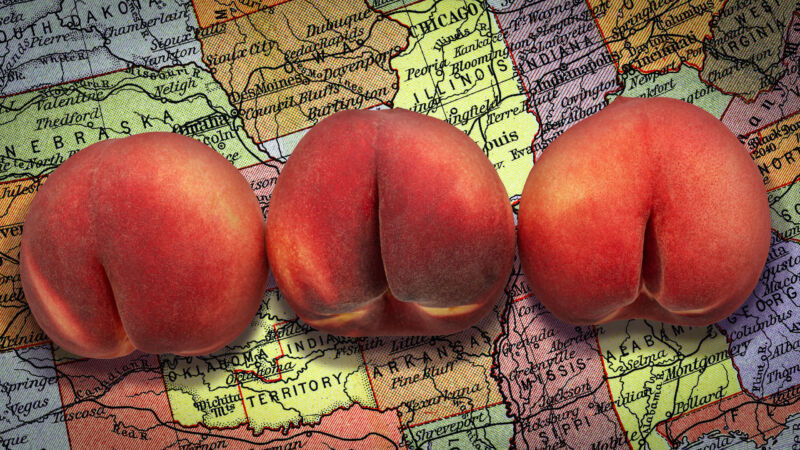

Pornhub will soon be blocked in five more states as the adult site continues to fight what it considers privacy-infringing age-verification laws that require Internet users to provide an ID to access pornography.

On July 1, according to a blog post on the adult site announcing the impending block, Pornhub visitors in Indiana, Idaho, Kansas, Kentucky, and Nebraska will be "greeted by a video featuring" adult entertainer Cherie Deville, "who explains why we had to make the difficult decision to block them from accessing Pornhub."

Pornhub explained that—similar to blocks in Texas, Utah, Arkansas, Virginia, Montana, North Carolina, and Mississippi—the site refuses to comply with soon-to-be-enforceable age-verification laws in this new batch of states that allegedly put users at "substantial risk" of identity theft, phishing, and other harms.

It’s not easy being a young person these days. School, friendships, social media — they’re all piling on pressure, and they all seem to intertwine. After a while, sometimes it helps just to take a break. This, in a nutshell, is what mental health days for students are all about. Not familiar with the concept? […]

The post A K-12 guide to mental health days for students appeared first on ManagedMethods.

The post A K-12 guide to mental health days for students appeared first on Security Boulevard.

© Walt Disney Pictures/AJ Pics, via Alamy

Credit: MirageC | Moment

US Surgeon General Vivek Murthy wants to put a warning label on social media platforms, alerting young users of potential mental health harms.

"It is time to require a surgeon general’s warning label on social media platforms stating that social media is associated with significant mental health harms for adolescents," Murthy wrote in a New York Times op-ed published Monday.

Murthy argued that a warning label is urgently needed because the "mental health crisis among young people is an emergency," and adolescents overusing social media can increase risks of anxiety and depression and negatively impact body image.

Credit: Francesco Carta fotografo | Moment

An Indiana cop has resigned after it was revealed that he frequently used Clearview AI facial recognition technology to track down social media users not linked to any crimes.

According to a press release from the Evansville Police Department, this was a clear "misuse" of Clearview AI's controversial face scan tech, which some US cities have banned over concerns that it gives law enforcement unlimited power to track people in their daily lives.

To help identify suspects, police can scan what Clearview AI describes on its website as "the world's largest facial recognition network." The database pools more than 40 billion images collected from news media, mugshot websites, public social media, and other open sources.

Source: security.apple.com

At the heart of PCC is Apple's stated commitment to on-device processing. "When Apple is responsible for user data in the cloud, we protect it with state-of-the-art security in our services," the spokesperson explained. "But for the most sensitive data, we believe end-to-end encryption is our most powerful defense."

Despite this commitment, Apple has stated that for more sophisticated AI requests, Apple Intelligence needs to leverage larger, more complex models in the cloud. This presented a challenge to the company, as traditional cloud AI security models were found lacking in meeting privacy expectations.

Apple stated that PCC is designed with several key features to ensure the security and privacy of user data, claiming the following implementations:

"Security researchers need to be able to verify, with a high degree of confidence, that our privacy and security guarantees for Private Cloud Compute match our public promises. We already have an earlier requirement for our guarantees to be enforceable. Hypothetically, then, if security researchers had sufficient access to the system, they would be able to verify the guarantees."

However, despite Apple's assurances, the announcement of Apple Intelligence drew mixed reactions online, with some already likening it to Microsoft's Recall. In reaction to Apple's announcement, Elon Musk took to X to announce that Apple devices may be banned from his companies, citing the integration of OpenAI as an 'unacceptable security violation.' Others have also raised questions about the information that might be sent to OpenAI.

[caption id="attachment_76692" align="alignnone" width="596"] Source: X.com[/caption]

[caption id="attachment_76693" align="alignnone" width="418"] Source: X.com[/caption]

[caption id="attachment_76695" align="alignnone" width="462"] Source: X.com[/caption]

According to Apple's statements, requests made on its devices are not stored by OpenAI, and users’ IP addresses are obscured. Apple stated that it would also add “support for other AI models in the future.”

Andy Wu, an associate professor at Harvard Business School, who researches the usage of AI by tech companies, highlighted the challenges of running powerful generative AI models while limiting their tendency to fabricate information. “Deploying the technology today requires incurring those risks, and doing so would be at odds with Apple’s traditional inclination toward offering polished products that it has full control over.”

Media Disclaimer: This report is based on internal and external research obtained through various means. The information provided is for reference purposes only, and users bear full responsibility for their reliance on it. The Cyber Express assumes no liability for the accuracy or consequences of using this information.

Credit: RicardoImagen | E+

Photos of Brazilian kids—sometimes spanning their entire childhood—have been used without their consent to power AI tools, including popular image generators like Stable Diffusion, Human Rights Watch (HRW) warned on Monday.

This act poses urgent privacy risks to kids and seems to increase risks of non-consensual AI-generated images bearing their likenesses, HRW's report said.

An HRW researcher, Hye Jung Han, helped expose the problem. She analyzed "less than 0.0001 percent" of LAION-5B, a dataset built from Common Crawl snapshots of the public web. The dataset does not contain the actual photos but includes image-text pairs derived from 5.85 billion images and captions posted online since 2008.

© IMAGO/OceanGate Expeditions, via Alamy

© Jason Henry for The New York Times

© Jim Wilson/The New York Times

© Mark Abramson for The New York Times

© Hannah Yoon for The New York Times