The Summit 1 is not peak e-mountain bike, but it’s a great all-rounder

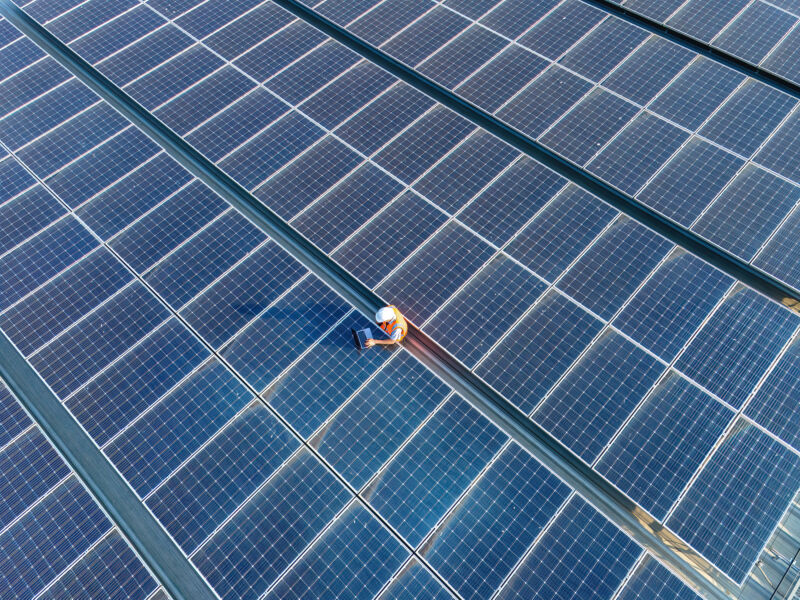

(credit: John Timmer)

As I mentioned in another recent review, I've been checking out electric hardtail mountain bikes lately. Their relative simplicity compared to full-suspension models tends to allow companies to hit a lower price point without sacrificing much in terms of component quality, potentially opening up mountain biking to people who might not otherwise consider it. The first e-hardtail I checked out, Aventon's Ramblas, fits this description to a T, offering a solid trail riding experience at a price that's competitive with similar offerings from major manufacturers.

Velotric's Summit 1 takes a slightly different approach. The company has made a few compromises that let it bring the price down to just under $2,000, significantly lower than much of the competition. The result is a bike that's a bit of a step down on some of the more challenging trails. But it can still do about 90 percent of what most alternatives offer, and it's probably a better all-around bicycle for people who also intend to use it for commuting or running errands.

Making the Summit

Velotric is another e-bike-only company, and we've generally been impressed by its products, which offer a fair bit of value for their price. The Summit 1 seems to be a reworking of its T-series bikes (which also impressed us) into mountain bike form. You get a similar app experience and integration with Apple's Find My system, though the company has ditched the thumbprint reader, which was meant to serve as a security measure. Velotric has also done some nice work on its packaging to smooth out the assembly process, placing different parts in labeled sub-boxes.